Space Grade Communication Delay Training

Developing Delay-Tolerant Protocols and Procedures

Understanding the Need for Delay-Tolerant Protocols

Delay-tolerant networks (DTNs) have emerged as a critical solution for environments where standard network connectivity breaks down. Disaster zones with compromised infrastructure, deep-space communication links, and remote sensor arrays all face long delays and frequent disconnections. What makes DTNs fundamentally different is their ability to function without continuous connectivity, storing data and forwarding it as transmission opportunities arise. This capability demands protocol architectures that do not assume a complete end-to-end path exists at the moment data is sent.

Unlike conventional networks that rely on persistent links, DTNs operate more like a digital relay race. Information gets passed from node to node, sometimes waiting hours or days for the next connection. This store-and-forward paradigm requires rethinking everything from data packaging to delivery confirmation systems.
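A minimal sketch of the store-and-forward idea, assuming the simplest possible policy: a node hands every stored bundle to whichever peer it meets, and a bundle counts as delivered once it reaches the node named as its destination. The `Bundle` and `Node` classes are hypothetical illustrations, not the Bundle Protocol's actual data model; a real node would forward selectively based on routing decisions.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Bundle:
    """A unit of data carried through the network (hypothetical minimal fields)."""
    bundle_id: str
    destination: str
    payload: bytes


@dataclass
class Node:
    name: str
    buffer: deque = field(default_factory=deque)   # bundles awaiting a contact
    delivered: list = field(default_factory=list)  # bundles addressed to this node

    def receive(self, bundle: Bundle) -> None:
        if bundle.destination == self.name:
            self.delivered.append(bundle)  # final hop reached
        else:
            self.buffer.append(bundle)     # store until the next contact opens

    def contact(self, peer: "Node") -> list:
        """Forward every stored bundle while the link to `peer` is up.

        Returns the ids of the bundles handed over during this contact.
        """
        forwarded = []
        while self.buffer:
            bundle = self.buffer.popleft()
            peer.receive(bundle)
            forwarded.append(bundle.bundle_id)
        return forwarded
```

In a scenario where a sensor never has a direct link to a ground station, a relay node can carry the reading across two separate contacts: `sensor.contact(relay)` at one moment and `relay.contact(ground)` hours later.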

Key Challenges in DTN Architecture

Designing for unpredictable delivery timelines presents unique obstacles. Engineers must create systems that not only withstand prolonged storage periods but also intelligently prioritize data based on both urgency and expiration timelines. A weather alert in a disaster zone loses all value if it arrives after the storm has passed, making temporal relevance a core design consideration.
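One way to combine urgency with expiration is a priority queue that discards bundles whose deadline has passed instead of spending a scarce transmission window on them. The sketch below assumes a numeric priority (lower means more urgent) and an absolute expiry time per item; `ForwardingQueue` and its methods are hypothetical names used only for illustration.

```python
import heapq
import itertools


class ForwardingQueue:
    """Orders payloads by urgency and drops any that expired before sending."""

    def __init__(self):
        self._heap = []
        # Monotonic counter breaks ties so equal priorities stay first-in, first-out.
        self._counter = itertools.count()

    def push(self, payload, priority, expires_at):
        # heapq pops the smallest tuple first, so lower priority numbers go out sooner.
        heapq.heappush(self._heap, (priority, next(self._counter), expires_at, payload))

    def pop_next(self, now):
        """Return the most urgent payload still valid at time `now`, or None."""
        while self._heap:
            _, _, expires_at, payload = heapq.heappop(self._heap)
            if expires_at > now:
                return payload
            # Expired (like a weather alert after the storm): discard it silently.
        return None
```

With a storm warning valid until t=100, a stale alert that expired at t=10, and a low-priority sensor log, `pop_next(50)` returns the warning first, skips the stale alert, and then returns the log.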

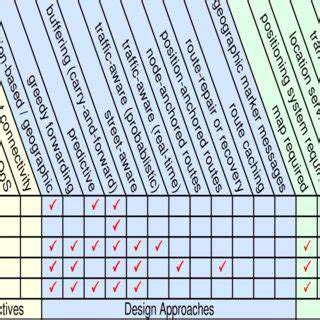

Routing presents another complex puzzle. Traditional routing algorithms assume that a complete path to the destination exists at the moment data is sent; without continuous connectivity, that assumption fails. Effective solutions must account for probabilistic node encounters, varying transmission windows, and the dynamic nature of mobile networks. Some innovative approaches even incorporate social network patterns when nodes represent personal devices carried by humans.
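To make "probabilistic node encounters" concrete, the sketch below keeps a per-node table of delivery predictabilities, modeled loosely on the update rules of PRoPHET (Probabilistic Routing Protocol using History of Encounters and Transitivity): meeting a node raises the estimate, estimates decay with time, and a peer's good contacts transfer partially to us. The constants `p_init`, `beta`, and `gamma` are illustrative assumptions, not tuned or standardized defaults, and only one side of the encounter is updated here for brevity.

```python
class DeliveryPredictability:
    """Delivery-predictability table for one node (PRoPHET-style sketch)."""

    def __init__(self, name, p_init=0.75, beta=0.25, gamma=0.98):
        self.name = name
        self.p_init = p_init  # boost applied on each direct encounter
        self.beta = beta      # damping applied to transitive (indirect) estimates
        self.gamma = gamma    # aging factor per elapsed time unit
        self.table = {}       # destination name -> predictability in [0, 1]

    def get(self, dest):
        return self.table.get(dest, 0.0)

    def age(self, elapsed_units):
        # Estimates decay while nodes go without meeting.
        factor = self.gamma ** elapsed_units
        for dest in self.table:
            self.table[dest] *= factor

    def encounter(self, peer):
        # Direct update: meeting a node makes future meetings more likely.
        old = self.get(peer.name)
        self.table[peer.name] = old + (1 - old) * self.p_init
        p_ab = self.table[peer.name]
        # Transitive update: if the peer often meets C, C is reachable through it.
        for dest, p_bc in list(peer.table.items()):
            if dest in (self.name, peer.name):
                continue
            old = self.get(dest)
            self.table[dest] = old + (1 - old) * p_ab * p_bc * self.beta

    def should_forward(self, peer, dest):
        # Hand a bundle over only if the peer is the better carrier for `dest`.
        return peer.get(dest) > self.get(dest)
```

If node B already meets C often (predictability 0.8), then after A meets B once, A rates B at 0.75 and C at only 0.15, so A forwards bundles for C to B rather than carrying them itself.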

Innovative Data Management Approaches

At the heart of every DTN lies its data handling methodology. Nodes must function as intelligent custodians of information, making autonomous decisions about what to keep, what to forward, and when to discard data. This requires sophisticated caching algorithms that consider factors like storage limits, data freshness requirements, and predicted future connectivity.

Some systems implement hierarchical storage, keeping critical data in protected memory while allowing less urgent information to be purged when space runs low. Others employ predictive models to anticipate which nodes are most likely to reach the final destination soonest.
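A minimal sketch of the two-tier idea, assuming each stored item carries a size, a `critical` flag, and a creation time used as a freshness signal. Non-critical items are purged oldest first when space runs low; critical items are never evicted, and a new item is rejected if room cannot be made without touching them. `TieredBuffer` and `StoredBundle` are hypothetical names, and real systems would weigh more factors (such as predicted connectivity) than age alone.

```python
from dataclasses import dataclass


@dataclass
class StoredBundle:
    bundle_id: str
    size: int
    critical: bool     # critical bundles live in the protected tier
    created_at: float  # older means less fresh, so evicted first


class TieredBuffer:
    """Bounded store that sacrifices stale, non-critical data to make room."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.bundles = {}

    def used(self):
        return sum(b.size for b in self.bundles.values())

    def store(self, bundle):
        """Try to store `bundle`; return False if it cannot fit."""
        need = bundle.size - (self.capacity - self.used())
        if need > 0:
            # Eviction candidates: non-critical bundles only, stalest first.
            evictable = sorted(
                (b for b in self.bundles.values() if not b.critical),
                key=lambda b: b.created_at,
            )
            freed, victims = 0, []
            for b in evictable:
                if freed >= need:
                    break
                victims.append(b)
                freed += b.size
            if freed < need:
                return False  # would require evicting protected data
            for b in victims:
                del self.bundles[b.bundle_id]
        self.bundles[bundle.bundle_id] = bundle
        return True
```

Rejecting rather than evicting when only critical data remains is a deliberate choice in this sketch; a system could instead hand the new bundle back to the sender or to another custodian.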

Evolution of Routing Methodologies

The routing algorithms powering DTNs represent some of the field's most creative engineering. Unlike their internet counterparts that assume stable paths, these solutions embrace uncertainty. Modern approaches might combine elements of epidemic routing (flooding copies through the network) with sophisticated encounter prediction models to optimize delivery probabilities.
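The flooding half of that combination can be illustrated with the anti-entropy step of classic epidemic routing: when two nodes meet, they compare summary vectors (the sets of message ids each holds) and copy across whatever the other lacks. The sketch assumes each node's store is a plain dict of message id to payload and omits the hop limits and buffer management a practical implementation needs.

```python
def epidemic_exchange(store_a, store_b):
    """One epidemic-routing session between two nodes.

    Each store maps message id -> payload. After the exchange both stores
    hold the union of messages. Returns (ids sent a->b, ids sent b->a).
    """
    # Summary vectors: just the ids each node currently carries.
    summary_a, summary_b = set(store_a), set(store_b)
    a_to_b = summary_a - summary_b
    b_to_a = summary_b - summary_a
    for msg_id in a_to_b:
        store_b[msg_id] = store_a[msg_id]
    for msg_id in b_to_a:
        store_a[msg_id] = store_b[msg_id]
    return a_to_b, b_to_a
```

Because every encounter spreads every message, delivery is fast but storage and bandwidth costs grow quickly, which is why the text pairs flooding with encounter prediction.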

Some military and space applications even incorporate mission plans into routing decisions - if we know a satellite will pass over a ground station in 6 hours, we can schedule transmissions accordingly. This blending of scheduling and routing opens new dimensions in network planning.
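When contacts are known in advance, routing becomes a search over a schedule. The sketch below computes the earliest arrival time over a contact plan, in the spirit of Contact Graph Routing (CGR) but greatly simplified: it assumes each contact is a `(from, to, window_start, window_end, one_way_delay)` tuple, ignores transmission time and link capacity, and lets a bundle wait at a node until a window opens. The function name and tuple layout are hypothetical.

```python
import heapq


def earliest_arrival(contacts, source, destination, start_time):
    """Earliest time a bundle leaving `source` at `start_time` reaches `destination`.

    Returns (arrival_time, list of hops) or None if no scheduled path exists.
    Dijkstra-style search where edge availability depends on the current time.
    """
    best = {source: start_time}
    queue = [(start_time, source, [source])]
    while queue:
        t, node, path = heapq.heappop(queue)
        if node == destination:
            return t, path
        if t > best.get(node, float("inf")):
            continue  # stale queue entry; a faster route was already found
        for frm, to, w_start, w_end, delay in contacts:
            if frm != node or w_end < t:
                continue  # wrong node, or this window closed before we arrived
            depart = max(t, w_start)  # wait in storage until the window opens
            arrive = depart + delay
            if arrive < best.get(to, float("inf")):
                best[to] = arrive
                heapq.heappush(queue, (arrive, to, path + [to]))
    return None
```

With a rover-to-orbiter window at hour 1, an orbiter-to-ground pass at hour 6, and a direct rover-to-ground window only at hour 20, the search routes through the orbiter and arrives at hour 6.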

Redundancy as a Reliability Strategy

In environments where single-point failures could mean permanent data loss, redundancy transforms from luxury to necessity. Strategic replication creates multiple independent paths for critical information, dramatically increasing the odds that at least one copy reaches its destination. This approach proves particularly valuable in humanitarian scenarios where communication breakdowns could mean life-or-death situations.
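The arithmetic behind "dramatically increasing the odds" is short if one assumes every copy succeeds independently with the same probability p: the chance that all k copies fail is (1 - p)^k, so at least one arrives with probability 1 - (1 - p)^k. Independence is an optimistic assumption when copies share relays or links, so treat these numbers as an upper bound.

```python
def delivery_probability(p_single, copies):
    """Chance that at least one of `copies` independent copies arrives."""
    return 1 - (1 - p_single) ** copies


def copies_needed(p_single, target):
    """Smallest number of independent copies that meets a target delivery probability."""
    if not (0 < p_single <= 1 and 0 <= target < 1):
        raise ValueError("need 0 < p_single <= 1 and 0 <= target < 1")
    k = 1
    while delivery_probability(p_single, k) < target:
        k += 1
    return k
```

With p = 0.6 per copy, three copies give 1 - 0.4^3 = 0.936, and six copies are needed to exceed 0.99.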

Advanced systems implement controlled flooding - creating enough copies to ensure delivery without overwhelming limited network resources. The balance between reliability and efficiency becomes a key optimization parameter.
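Binary Spray and Wait is one well-known form of controlled flooding: the source starts with a fixed budget of L copy tokens, a carrier holding more than one token gives half of them to each new relay it meets, and a carrier left with a single token stops spraying and waits to meet the destination directly. The sketch below shows only the token-splitting rule; the function names are hypothetical and encounters are simulated in a fixed order.

```python
def spray_step(tokens):
    """Split copy tokens with a relay that lacks the message.

    Returns (tokens kept, tokens given). A single token means the wait phase.
    """
    if tokens <= 1:
        return tokens, 0          # wait phase: keep the last copy for the destination
    given = tokens // 2
    return tokens - given, given  # spray phase: hand over half the budget


def spray_until_waiting(initial_tokens):
    """Simulate encounters with fresh relays until every carrier is waiting.

    Returns the token count held by each carrier at the end.
    """
    carriers = [initial_tokens]
    while any(t > 1 for t in carriers):
        i = next(i for i, t in enumerate(carriers) if t > 1)
        kept, given = spray_step(carriers[i])
        carriers[i] = kept
        carriers.append(given)
    return carriers
```

However encounters unfold, the total never exceeds L copies, which is exactly the "enough copies without overwhelming the network" balance described above: L is the tuning knob between reliability and resource use.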

Security in Intermittent Environments

Protecting data that might spend days moving through untrusted nodes presents distinct security challenges. Security protocols that depend on interactive handshakes, online certificate checks, or short-lived session keys break down when a single round trip can take hours and messages sit in storage for days, so DTNs require different approaches to key management and authentication. Some systems implement hash-and-carry techniques where each forwarding node adds its own cryptographic layer.
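A minimal sketch of per-hop layering for integrity, assuming each relay shares a hypothetical key with the final verifier: every node appends an HMAC computed over the payload plus all earlier tags, so altering the payload or any earlier layer invalidates the chain. This provides integrity and a record of the path, not confidentiality. It is not the standardized format; Bundle Protocol version 7 uses BPSec (RFC 9172), which defines its own integrity and confidentiality blocks.

```python
import hashlib
import hmac


def add_hop_tag(payload, tags, node_id, node_key):
    """Append this node's layer: an HMAC-SHA256 over the payload and all prior tags.

    `node_key` is a hypothetical pre-shared key known to the node and the verifier.
    """
    prior = b"".join(tag for _, tag in tags)
    tag = hmac.new(node_key, payload + prior, hashlib.sha256).digest()
    return tags + [(node_id, tag)]


def verify_chain(payload, tags, keys):
    """Recompute every layer in order; any tampering breaks the chain."""
    prior = b""
    for node_id, tag in tags:
        expected = hmac.new(keys[node_id], payload + prior, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):
            return False
        prior += tag
    return True
```

Because no step requires contacting the sender, each relay can add its layer offline, which suits links where a round trip may take hours.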

Particularly sensitive applications might employ data aging mechanisms that automatically degrade information quality over time or distance from source, ensuring that even compromised data has limited usefulness to adversaries.
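As one hypothetical example of data aging, a location report could lose one decimal place of precision per hop away from its source, using hop count as a stand-in for distance. The policy and the `degrade_location` name are illustrative assumptions; five decimal places of latitude is roughly 1 m, while zero is roughly 111 km.

```python
def degrade_location(lat, lon, hops, full_precision=5):
    """Round coordinates more coarsely the farther a report travels from its source.

    Hypothetical policy: drop one decimal place per hop, never below zero places.
    """
    digits = max(0, full_precision - hops)
    return round(lat, digits), round(lon, digits)
```

A copy intercepted three hops from its source still reveals a neighborhood, but one intercepted ten hops away reveals little more than a region.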

Continuous Improvement and Future Considerations

Cultivating Organizational Adaptability

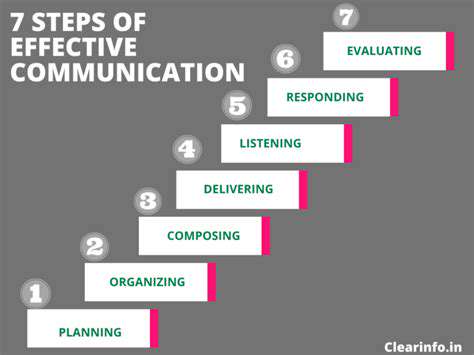

In an era of rapid technological change, the ability to evolve has become one of an organization's most valuable assets. Continuous improvement represents not just a methodology but a cultural transformation. Forward-thinking companies now view adaptation as a core competency rather than an occasional necessity.

The most successful teams institutionalize learning, building reflection and adjustment into their daily rhythms. This might take the form of regular retrospectives, innovation time allocations, or cross-functional knowledge sharing sessions that surface improvement opportunities organically.

Systematic Opportunity Identification

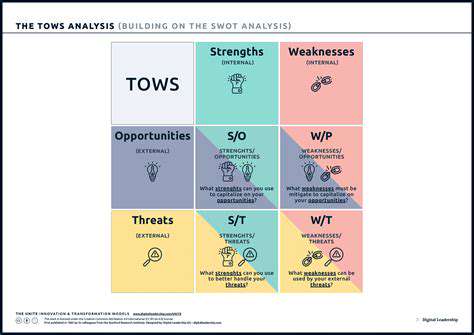

Effective improvement begins with honest assessment. Rigorous process analysis often reveals a Pareto pattern: a small share of operations (the rule of thumb is 20%) accounts for most of the inefficiency (roughly 80%), and the key lies in accurately identifying these leverage points. Modern diagnostic tools range from value-stream mapping to process mining software that reconstructs actual workflows from the event logs of IT systems.
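A basic Pareto analysis turns this rule of thumb into a concrete shortlist: rank causes by measured impact and keep adding them until the chosen share of the total is covered. The sketch assumes impacts have already been measured in a common unit (for example, hours of delay per month); the cause names below are hypothetical.

```python
def pareto_focus(impacts, threshold=0.8):
    """Smallest set of causes, largest first, covering `threshold` of total impact.

    `impacts` maps each cause (e.g. a process step) to a measured cost.
    """
    total = sum(impacts.values())
    ranked = sorted(impacts.items(), key=lambda kv: kv[1], reverse=True)
    focus, running = [], 0.0
    for cause, impact in ranked:
        if running >= threshold * total:
            break  # target share already covered
        focus.append(cause)
        running += impact
    return focus
```

For delays of 55 hours in approvals, 30 in rework, 8 in handoffs, 4 in data entry, and 3 in printing, the first two causes already cover 85% of the total, so they form the focus list.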

Some organizations employ waste walks, in which teams physically trace workflows looking for bottlenecks. Others use customer journey mapping to identify pain points from the end user's perspective. The common thread is structured, evidence-based evaluation.

Strategic Implementation Frameworks

Turning insights into action requires disciplined execution. The most effective improvement initiatives combine clear ownership with cross-functional collaboration - what some call the team of teams approach. Piloting changes on small scales before organization-wide rollout allows for refinement while minimizing disruption.

Agile methodologies have proven particularly effective here, with their emphasis on iterative development and frequent feedback loops. The key is maintaining momentum while remaining flexible enough to incorporate new learning.

Metrics That Matter

What gets measured gets improved, but choosing the right metrics proves critical. Leading organizations balance lagging indicators (like cost savings) with leading indicators (like process cycle times) to create a complete performance picture. Advanced analytics now allow real-time monitoring of improvement initiatives.

Some companies implement improvement scorecards that track both quantitative results and qualitative factors like team engagement. This holistic view prevents gaming of metrics while sustaining motivation.

Anticipating Change Waves

In volatile markets, adaptability is a major competitive advantage. Organizations that build scenario planning into their DNA can pivot faster when disruptions occur. Some maintain dedicated foresight teams that monitor emerging trends and run what-if exercises.

The most resilient companies develop optionality - maintaining multiple potential paths forward rather than committing to single trajectories. This approach reduces risk while preserving opportunities.

Technology as an Enabler

Digital tools have transformed improvement methodologies. From AI-powered process optimization to collaborative platforms that break down silos, technology amplifies human ingenuity. Automation handles routine tasks while employees focus on higher-value analysis and innovation.

Cloud-based analytics platforms now allow organizations to benchmark performance against industry peers, while digital twins enable low-risk simulation of process changes before implementation.

The Feedback Imperative

Continuous improvement thrives in cultures of psychological safety. When team members feel empowered to voice concerns and suggest alternatives without fear, organizations unlock their full innovative potential. Structured feedback mechanisms like regular pulse surveys complement informal channels.

Some forward-thinking companies implement failure forums where teams analyze setbacks without blame, extracting lessons to fuel future success. This reframing of mistakes as learning opportunities creates resilient, adaptive organizations.
