Data Lakehouse Architecture for Advanced Supply Chain Analytics

Data lakehouses are rapidly emerging as a crucial technology for supply chain insights. They represent a significant departure from traditional data warehousing approaches, offering a more flexible and scalable architecture for storing and processing vast amounts of data. This shift allows businesses to leverage a wider range of data sources, from transactional records to sensor data and external market intelligence, to gain a more comprehensive understanding of their supply chain operations. This flexibility is paramount in today's dynamic and complex global supply chains.

Unlike data lakes, which often suffer from a lack of structure and queryability, data lakehouses combine the benefits of both data lakes and data warehouses. They provide a centralized, structured environment for data storage, while still accommodating the diverse, often unstructured, nature of supply chain data. This hybrid approach is critical for enabling efficient data analysis and reporting, leading to more informed decision-making.

Enhancing Supply Chain Visibility with Data Lakehouses

A key advantage of data lakehouses in the supply chain context is their ability to enhance visibility across the entire network. By integrating data from various sources, including inventory levels, transportation logistics, and supplier performance, businesses can gain a holistic view of their supply chain and identify potential bottlenecks, inefficiencies, and risks in real time. This real-time visibility is critical for proactive management, allowing for timely interventions and adjustments to maintain optimal flow.

The ability to track and analyze data from various stages of the supply chain, including production, warehousing, and distribution, provides a clear picture of performance and allows for the identification of areas requiring improvement. This enhanced visibility fosters greater agility and responsiveness, enabling businesses to react quickly to changing market conditions and disruptions.
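As a rough illustration of what combining sources can surface, the sketch below joins inventory levels, inbound shipment ETAs, and average daily demand by SKU and flags items likely to run out before replenishment arrives. The function name, the dictionary layout, and the sample figures are all hypothetical; a real lakehouse would typically express this as a query over its tables.

```python
def flag_stockout_risk(inventory, inbound_eta_days, daily_demand):
    """Return SKUs whose stock on hand runs out before the next inbound shipment.

    inventory:        {sku: units on hand}
    inbound_eta_days: {sku: days until the next shipment arrives}
    daily_demand:     {sku: average units consumed per day}
    """
    at_risk = []
    for sku, on_hand in inventory.items():
        demand = daily_demand.get(sku, 0)
        if demand <= 0:
            continue  # no consumption recorded, so no stockout risk to compute
        days_of_cover = on_hand / demand
        eta = inbound_eta_days.get(sku)  # None means nothing is inbound
        if eta is None or eta > days_of_cover:
            at_risk.append(sku)
    return sorted(at_risk)


# Hypothetical sample data for three SKUs.
inventory = {"A": 100, "B": 20, "C": 50}
daily_demand = {"A": 10, "B": 10, "C": 5}
inbound_eta_days = {"A": 5, "B": 4}

at_risk_skus = flag_stockout_risk(inventory, inbound_eta_days, daily_demand)
print(at_risk_skus)
```

Here SKU B is flagged because its two days of cover are shorter than the four-day ETA, and SKU C is flagged because nothing is inbound at all.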

Improving Decision-Making with Data-Driven Insights

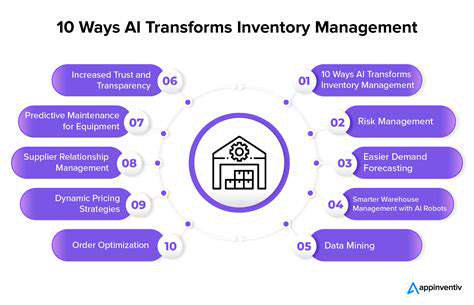

The structured and easily accessible data stored in a data lakehouse empowers businesses with data-driven insights. Complex queries and advanced analytics can be performed on the consolidated data, revealing patterns, trends, and correlations that might otherwise remain hidden. These insights are invaluable for strategic decision-making, allowing companies to optimize inventory management, streamline logistics, and negotiate better deals with suppliers.

Scalability and Cost-Effectiveness of Data Lakehouses

Data lakehouses are designed for scalability, accommodating the ever-increasing volume and velocity of data generated by modern supply chains. This scalability is crucial for adapting to evolving business needs and market demands. Furthermore, data lakehouses are often more cost-effective than traditional data warehousing solutions, particularly when the long-term value of improved decision-making and operational efficiency is taken into account. Managing diverse data types within a single platform also streamlines data management and reduces the need for disparate systems.

Leveraging Data Lakehouse for Real-time Supply Chain Monitoring

Data Lakehouse Architecture: A Foundation for Real-time Supply Chain Monitoring

The Data Lakehouse architecture is emerging as a critical component for modernizing supply chain management. Its ability to combine the flexibility of a data lake with the structured querying capabilities of a data warehouse allows organizations to store and process vast amounts of data, ranging from sensor readings and transactional records to external market data and historical trends. This unified approach facilitates real-time insights into supply chain dynamics, enabling proactive decision-making and improved operational efficiency.

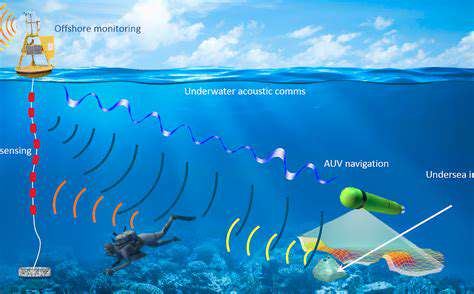

Real-time Data Ingestion and Processing: Key to Supply Chain Visibility

A critical aspect of leveraging the Data Lakehouse for real-time supply chain monitoring is the ability to ingest and process data in near real time. This involves integrating data streams from various sources, including warehouse management systems, transportation networks, and external market indicators. Technologies such as Apache Kafka (for transporting event streams) and Apache Spark (for processing them) can support this, enabling organizations to process data as it is generated.

This high-velocity data processing capability allows for immediate identification of anomalies and disruptions in the supply chain, enabling swift responses and minimizing potential bottlenecks and delays.
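One simple way to flag anomalies as readings arrive is a rolling z-score: compare each new value against the mean and standard deviation of a recent window. The sketch below is a minimal, standard-library illustration of that idea, not a description of any particular platform's method; the function name, window size, threshold, and sample transit times are assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(readings, window=5, threshold=3.0):
    """Yield (index, value) for readings far outside the recent window."""
    recent = deque(maxlen=window)
    for index, value in enumerate(readings):
        if len(recent) == window:
            mu = mean(recent)
            sigma = stdev(recent)
            # Skip the check when the window is perfectly flat (sigma == 0).
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                yield index, value
                continue  # keep the anomaly out of the baseline window
        recent.append(value)


# Hypothetical transit times in hours; the 60-hour shipment is the outlier.
transit_hours = [24, 25, 23, 24, 26, 25, 24, 60, 25, 24]
anomalies = list(detect_anomalies(transit_hours))
print(anomalies)
```

Excluding flagged values from the window keeps one extreme reading from inflating the baseline and hiding the next one. In a streaming job the same logic would run per key, for example per route or per carrier.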

Enhancing Supply Chain Resilience Through Predictive Analytics

By storing a comprehensive history of supply chain data within the Data Lakehouse, organizations can leverage advanced analytics to identify patterns and predict potential disruptions. This predictive capability is crucial for building supply chain resilience. The Data Lakehouse's ability to store and process diverse data types enables the development of sophisticated models that forecast potential delays, anticipate demand fluctuations, and identify risks in advance. This proactive approach empowers companies to mitigate potential issues and optimize resource allocation.
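To make the idea of forecasting from stored history concrete, here is a minimal sketch of simple exponential smoothing, one of the most basic demand-forecasting techniques. It is shown only as an illustration; production models would usually account for trend, seasonality, and external signals. The function name, the smoothing factor, and the demand figures are assumptions.

```python
def exponential_smoothing_forecast(history, alpha=0.5):
    """Return a one-step-ahead forecast using simple exponential smoothing.

    alpha close to 1 weights recent observations heavily;
    alpha close to 0 produces a smoother, slower-reacting forecast.
    """
    if not history:
        raise ValueError("history must contain at least one observation")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in the interval (0, 1]")
    level = history[0]
    for observed in history[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level


# Hypothetical weekly demand for one SKU.
weekly_demand = [100, 120, 110, 130]
forecast = exponential_smoothing_forecast(weekly_demand, alpha=0.5)
print(forecast)
```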

Data Exploration and Visualization for Informed Decision-Making

The Data Lakehouse provides a platform for comprehensive data exploration and visualization. This allows supply chain stakeholders to gain insights into various aspects of the supply chain, from inventory levels and transportation routes to supplier performance and customer demand. Interactive dashboards and visualizations can provide a clear and concise overview of the current state of the supply chain, highlighting areas of concern and opportunities for improvement.

Improved Collaboration and Communication Through Data Sharing

A key advantage of the Data Lakehouse architecture is its ability to facilitate data sharing across different departments and stakeholders within an organization. By providing a centralized repository for supply chain data, the Data Lakehouse enables seamless collaboration between procurement, operations, logistics, and sales teams. This improved communication fosters a shared understanding of the supply chain's performance and facilitates more coordinated responses to challenges.

Scalability and Cost-Effectiveness in Data Management

The Data Lakehouse's scalable architecture allows organizations to handle the increasing volume and velocity of data generated by modern supply chains. This scalability ensures that the system can adapt to future growth and changing demands without compromising performance. Furthermore, the Data Lakehouse often proves to be more cost-effective than traditional data warehousing solutions, as it leverages cloud-based storage and processing capabilities, reducing infrastructure costs and operational overhead. This cost-effectiveness is particularly beneficial for organizations seeking to enhance their supply chain monitoring capabilities without significant financial investment.

Advanced Analytics and Machine Learning on Supply Chain Data

Advanced Techniques in Predictive Modeling

Predictive modeling is a crucial aspect of advanced analytics, enabling businesses to anticipate future trends and outcomes. By leveraging historical data and employing sophisticated algorithms, businesses can gain valuable insights into potential market shifts, customer behavior, and operational efficiencies. This allows for proactive decision-making, optimized resource allocation, and ultimately, a more profitable and sustainable business strategy. Predictive models are not static; they require ongoing monitoring and refinement to ensure continued accuracy and relevance in a dynamic environment.

Leveraging Machine Learning Algorithms

Machine learning algorithms are the backbone of advanced analytics, empowering businesses to extract actionable intelligence from vast datasets. These algorithms, ranging from linear regression to deep neural networks, can identify complex patterns and relationships hidden within data that would otherwise go unnoticed. This ability to uncover hidden insights is crucial for businesses seeking to gain a competitive edge and optimize their operations across various departments.

Data Preparation and Feature Engineering

The quality of insights derived from advanced analytics is heavily dependent on the quality of the data used. A critical step is data preparation, which involves cleaning, transforming, and structuring data to ensure its suitability for analysis. This process often includes handling missing values, outliers, and inconsistent formats. Furthermore, feature engineering is essential to extract relevant features from the data, thereby improving the model's performance and predictive accuracy. Properly prepared data is the foundation upon which accurate and insightful models are built.
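The preparation steps described above can be sketched with the standard library alone. The example below fills missing values with the median, clips outliers to the usual 1.5 × IQR fences, and derives a lag feature. These specific choices (median imputation, IQR clipping, a one-step lag) and all names and sample values are assumptions made for illustration; other strategies may suit a given dataset better.

```python
from statistics import median, quantiles


def prepare_series(values):
    """Fill missing values (None) with the median and clip outliers to IQR fences."""
    known = [v for v in values if v is not None]
    fill = median(known)
    q1, _, q3 = quantiles(known, n=4)  # quartiles of the observed values
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    filled = [fill if v is None else v for v in values]
    return [min(max(v, low), high) for v in filled]


def add_lag_feature(values, lag=1):
    """Pair each value with the value observed `lag` steps earlier."""
    return [
        {"value": v, "previous": values[i - lag] if i >= lag else None}
        for i, v in enumerate(values)
    ]


# Hypothetical daily order counts with one gap and one extreme spike.
raw_orders = [10, None, 11, 13, 200, 12, 12]
cleaned = prepare_series(raw_orders)
features = add_lag_feature(cleaned)
```

With very short series the quartile estimates are coarse, so the clipping bounds here are only indicative.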

Deployment and Monitoring of Models

Developing a robust predictive model is only the first step. The model's deployment and ongoing monitoring are equally crucial for maximizing its value. Deployment involves integrating the model into the business processes, ensuring smooth data flow and automated predictions. Continuous monitoring is essential to identify and address any degradation in model performance over time. This proactive approach allows for adjustments to the model as conditions change, maintaining its accuracy and usefulness.
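A minimal sketch of one monitoring check follows: compare the model's error on recent data with the error measured at deployment, and flag the model when it has degraded beyond a tolerance. The function names, the choice of mean absolute error, and the 1.5× tolerance are assumptions for illustration rather than a recommended standard.

```python
def mean_absolute_error(actuals, predictions):
    """Average absolute difference between actual and predicted values."""
    if not actuals or len(actuals) != len(predictions):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(a - p) for a, p in zip(actuals, predictions)) / len(actuals)


def needs_retraining(baseline_mae, recent_actuals, recent_predictions, tolerance=1.5):
    """Return True when recent error exceeds the baseline by the given factor."""
    recent_mae = mean_absolute_error(recent_actuals, recent_predictions)
    return recent_mae > tolerance * baseline_mae


# Hypothetical demand values: the second set of predictions has drifted.
actual_demand = [100, 110, 120]
stable = needs_retraining(2.0, actual_demand, [101, 109, 121])
drifted = needs_retraining(2.0, actual_demand, [90, 120, 130])
print(stable, drifted)
```

A check like this could run on a schedule and feed an alert or a retraining job when it returns True.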

Business Applications and Case Studies

Advanced analytics and machine learning find applications across a wide spectrum of business functions. Examples include customer churn prediction, fraud detection, demand forecasting, and personalized marketing campaigns. Examining case studies of successful implementations demonstrates how these technologies can drive tangible improvements in business performance. Analyzing past successes provides valuable insights and best practices for future implementations. Furthermore, understanding the context of the application helps to ensure that the model is tailored to the specific needs and goals of the business.

Data Security and Governance in the Data Lakehouse Environment

Data Security Considerations in a Data Lakehouse

Implementing robust data security measures is paramount in a data lakehouse environment, as it involves integrating data from various sources, potentially including sensitive information. This necessitates a multi-layered approach encompassing access control, encryption, and data masking. Properly configuring access controls to limit data visibility based on user roles and responsibilities is crucial. This granular control ensures that only authorized personnel can access specific data subsets, mitigating the risk of unauthorized disclosure or modification. Furthermore, encrypting data both in transit and at rest is vital to protect sensitive information from potential breaches. This involves choosing appropriate encryption algorithms and implementing secure key management practices. Implementing data masking techniques, such as anonymization or pseudonymization, can further safeguard sensitive data while still allowing for analysis and insights to be derived.
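The combination of role-based access and pseudonymization can be sketched as follows. This is a simplified illustration using Python's standard `hmac` and `hashlib` modules; the roles, column names, policy tables, and key are hypothetical, and a real deployment would rely on the platform's access-control features and a managed key store.

```python
import hashlib
import hmac

# Hypothetical policy: which columns each role may see.
ROLE_COLUMNS = {
    "analyst": {"supplier_id", "order_total"},
    "procurement": {"supplier_id", "supplier_name", "order_total"},
}
# Hypothetical policy: columns shown to a role only in pseudonymized form.
MASKED_COLUMNS = {"analyst": {"supplier_id"}}


def pseudonymize(value, secret_key):
    """Return a keyed SHA-256 digest; equal inputs map to equal tokens."""
    return hmac.new(secret_key, str(value).encode("utf-8"), hashlib.sha256).hexdigest()


def view_for_role(record, role, secret_key):
    """Keep only the columns a role may see, masking those flagged for it."""
    allowed = ROLE_COLUMNS.get(role, set())  # unknown roles see nothing
    masked = MASKED_COLUMNS.get(role, set())
    return {
        column: pseudonymize(value, secret_key) if column in masked else value
        for column, value in record.items()
        if column in allowed
    }


key = b"demo-key-do-not-use-in-production"  # assumption: real keys come from a key store
order = {"supplier_id": "SUP-001", "supplier_name": "Acme Metals", "order_total": 1250.0}
analyst_view = view_for_role(order, "analyst", key)
procurement_view = view_for_role(order, "procurement", key)
```

Because the digest is keyed and deterministic, analysts can still group and join on the pseudonymized supplier identifier without seeing the real one.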

Data loss prevention (DLP) policies are essential to prevent sensitive data from leaving the data lakehouse environment without proper authorization. These policies should be tailored to the specific types of data stored and the potential risks associated with each dataset. Regular security audits and vulnerability assessments are vital for identifying and addressing potential security gaps. Proactive monitoring of data access patterns and activity logs can help detect suspicious behavior and respond swiftly to potential security threats. A robust incident response plan should be in place to manage and mitigate any security incidents, ensuring minimal disruption and data loss.

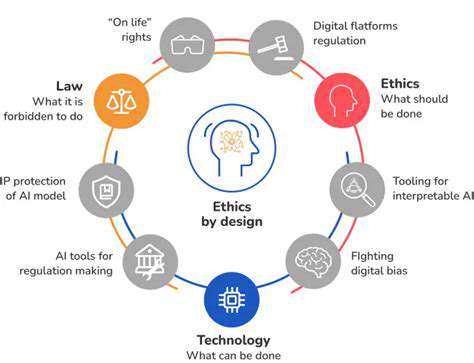

Governance Frameworks for Data Lakehouse

Establishing clear governance frameworks for data within the data lakehouse is critical for ensuring data quality, consistency, and compliance. These frameworks should outline policies for data ingestion, storage, processing, and access, ensuring that data is handled responsibly and ethically. A well-defined data cataloging system is essential to provide a comprehensive inventory of data assets, their characteristics, and usage patterns. This enables data stewards to understand the data landscape, track its lineage, and ensure its usability for various analytical purposes.

Data quality rules and standards should be defined and enforced to maintain the accuracy, completeness, and consistency of data within the lakehouse. This involves defining specific quality metrics, establishing validation procedures, and implementing automated checks to identify and rectify data issues. Data lineage tracking is crucial to understand the origin and transformations of data throughout its lifecycle, enabling better data governance and accountability. This helps in tracing the source of errors or inconsistencies and maintaining compliance with regulations.
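Automated quality checks of the kind described above can be as simple as a set of rules applied to each record at ingestion. The sketch below is illustrative only; the required fields, the rule on quantity, and the sample shipments are assumptions, not a prescribed schema.

```python
# Hypothetical required fields for a shipment record.
REQUIRED_FIELDS = ("shipment_id", "sku", "quantity")


def validate_record(record):
    """Return a list of rule violations; an empty list means the record passes."""
    errors = []
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            errors.append(f"missing {field}")
    quantity = record.get("quantity")
    if isinstance(quantity, (int, float)) and quantity < 0:
        errors.append("quantity must be non-negative")
    return errors


def split_valid_invalid(records):
    """Separate passing records from rejected ones, keeping the reasons."""
    valid, rejected = [], []
    for record in records:
        errors = validate_record(record)
        if errors:
            rejected.append((record, errors))
        else:
            valid.append(record)
    return valid, rejected


batch = [
    {"shipment_id": "S1", "sku": "A", "quantity": 5},
    {"shipment_id": "S2", "sku": "", "quantity": -3},
]
valid, rejected = split_valid_invalid(batch)
```

Keeping the rejection reasons alongside each record makes it easier to trace a quality issue back to its source system.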

Data Lifecycle Management in a Data Lakehouse

Effective data lifecycle management (DLM) is essential for optimizing the data lakehouse's performance and reducing storage costs. This encompasses the entire journey of data, from ingestion to archiving or deletion, ensuring that data is stored in the most appropriate format and location throughout its lifecycle. Data retention policies are critical to ensure compliance with regulations and business requirements while minimizing storage costs. These policies must define the timeframe for retaining various types of data and establish procedures for archiving or deleting data that is no longer needed.

Properly designed data storage tiers can optimize storage costs by moving less frequently accessed data to less expensive storage options. This involves categorizing data based on its access frequency and implementing automated workflows to migrate data between tiers as needed. Metadata management plays a crucial role in enabling efficient data discovery and retrieval. Well-organized metadata allows users to easily locate and understand the data they need, significantly improving data utilization and reducing wasted time searching for relevant information. Version control and data backups are also critical aspects of DLM to ensure data integrity and recoverability in case of data loss or corruption. Implementing these elements effectively safeguards the entire data lifecycle.
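As a rough sketch of how tiering and retention rules might be expressed, the example below assigns a storage tier from the time since last access and checks whether a dataset has passed its retention period. The tier names, the 30-day and 180-day thresholds, and the retention periods are hypothetical; actual values depend on regulatory and business requirements.

```python
from datetime import date


def choose_tier(last_accessed, today, warm_after_days=30, cold_after_days=180):
    """Pick a storage tier from the number of days since the data was last read."""
    idle_days = (today - last_accessed).days
    if idle_days >= cold_after_days:
        return "cold"
    if idle_days >= warm_after_days:
        return "warm"
    return "hot"


def is_expired(created, today, retention_days):
    """Return True when the data is older than its retention period."""
    return (today - created).days > retention_days


today = date(2024, 6, 30)
recent_tier = choose_tier(date(2024, 6, 25), today)
quarter_old_tier = choose_tier(date(2024, 4, 1), today)
year_old_tier = choose_tier(date(2023, 6, 30), today)
print(recent_tier, quarter_old_tier, year_old_tier)
```

A scheduled job could apply these two functions to catalog metadata and then move, archive, or delete the corresponding files.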