Data Lakehouse Architecture for Supply Chain Analytics

Enabling Real-time Decision Making in Supply Chains

Real-time Data Acquisition

Gathering data in real time is the foundation of real-time decision-making. It requires robust data pipelines that capture information as it occurs, with minimal latency, because every downstream analysis and action depends on accurate and timely ingestion. Data sources can range from sensors and IoT devices to transactional systems and CRM platforms.

Effective real-time data acquisition requires careful consideration of data volume, velocity, and variety. Systems must be designed to handle high-throughput data streams efficiently, minimizing delays and ensuring data integrity throughout the process. Choosing the right technology stack is paramount for maintaining a high degree of reliability.
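As a rough illustration of handling a high-velocity stream while protecting data integrity, the sketch below groups incoming events into small batches and skips malformed ones. The field names in `REQUIRED_FIELDS`, the sample events, and the `micro_batches` helper are all hypothetical; a real pipeline would more likely rely on a streaming platform than on an in-memory generator.

```python
from typing import Dict, Iterable, Iterator, List

# Hypothetical minimal schema; real sources will define their own fields.
REQUIRED_FIELDS = ("source", "event_time", "payload")


def micro_batches(events: Iterable[Dict], max_size: int) -> Iterator[List[Dict]]:
    """Group valid events into batches of at most max_size, preserving order."""
    if max_size < 1:
        raise ValueError("max_size must be at least 1")
    batch: List[Dict] = []
    for event in events:
        # Skip malformed events; a production pipeline would typically
        # route them to a dead-letter store instead of dropping them.
        if not all(field in event for field in REQUIRED_FIELDS):
            continue
        batch.append(event)
        if len(batch) == max_size:
            yield batch
            batch = []
    if batch:  # flush the final partial batch
        yield batch


# Illustrative events from a sensor, an ERP system, and a CRM platform.
sample_events = [
    {"source": "dock-sensor-1", "event_time": "2024-01-01T08:00:00Z", "payload": {"temp_c": 4.1}},
    {"source": "erp", "event_time": "2024-01-01T08:00:01Z", "payload": {"order_id": "O-1"}},
    {"source": "dock-sensor-2", "payload": {"temp_c": 3.9}},  # missing event_time
    {"source": "crm", "event_time": "2024-01-01T08:00:02Z", "payload": {"ticket": 17}},
]
batches = list(micro_batches(sample_events, max_size=2))
```

Small batches are one way to trade a little latency for throughput; the right batch size depends on the workload.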

Data Processing and Transformation

Raw data, while essential, often requires significant processing and transformation before it can be analyzed for actionable insights. This stage involves cleaning, filtering, and aggregating data to remove inconsistencies and noise. Data transformation also involves converting data into a format suitable for analysis and visualization.

Efficiency matters at this stage. Pipelines should be tuned for throughput and must handle a variety of data formats and structures, while data integrity and accuracy are maintained at every processing step.
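The cleaning, filtering, and aggregating steps described in this section can be sketched in a few lines. The record layout (`warehouse`, `sku`, `qty`) and the sample readings are assumptions made up for illustration, not a schema from any particular system.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

# Hypothetical raw stock readings with typical inconsistencies.
raw_readings = [
    {"warehouse": " WH-1 ", "sku": "a100", "qty": "40"},
    {"warehouse": "WH-1", "sku": "A100", "qty": "10"},
    {"warehouse": "WH-2", "sku": "A100", "qty": "-5"},   # negative quantity
    {"warehouse": "WH-2", "sku": "B200", "qty": "n/a"},  # unparseable quantity
    {"warehouse": "WH-2", "sku": "B200", "qty": "7"},
]


def clean(record: Dict) -> Optional[Dict]:
    """Normalize one record; return None if it cannot be trusted."""
    try:
        qty = int(record["qty"])
        warehouse = record["warehouse"].strip()
        sku = record["sku"].strip().upper()
    except (KeyError, TypeError, ValueError, AttributeError):
        return None
    if qty < 0:  # filter out physically impossible values
        return None
    return {"warehouse": warehouse, "sku": sku, "qty": qty}


def aggregate(records: List[Dict]) -> Dict[Tuple[str, str], int]:
    """Sum cleaned quantities per (warehouse, sku)."""
    totals: Dict[Tuple[str, str], int] = defaultdict(int)
    for record in records:
        cleaned = clean(record)
        if cleaned is not None:
            totals[(cleaned["warehouse"], cleaned["sku"])] += cleaned["qty"]
    return dict(totals)


totals = aggregate(raw_readings)
# totals == {("WH-1", "A100"): 50, ("WH-2", "B200"): 7}
```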

Real-time Analytics

Advanced analytics techniques are essential for extracting insights from real-time data streams. This includes using statistical models, machine learning algorithms, and predictive analytics to identify patterns, anomalies, and trends. Real-time analytics empowers decision-makers to react swiftly to changing circumstances.
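One simple way to spot anomalies in a stream is a rolling z-score, shown below as a minimal sketch. This is only one of many possible techniques, and the window size, threshold, minimum history, and sample transit times are illustrative assumptions.

```python
from collections import deque
from statistics import mean, stdev


class RollingAnomalyDetector:
    """Flag values that deviate strongly from a rolling window of recent values."""

    def __init__(self, window: int = 20, threshold: float = 3.0, min_history: int = 5):
        self.values = deque(maxlen=window)  # oldest values drop off automatically
        self.threshold = threshold
        self.min_history = max(min_history, 2)  # stdev needs at least two points

    def observe(self, value: float) -> bool:
        """Return True if value is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.values) >= self.min_history:
            mu = mean(self.values)
            sigma = stdev(self.values)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        # Simplification: anomalous values are kept in the window too.
        self.values.append(value)
        return is_anomaly


# Hypothetical transit times in hours for one shipping lane.
transit_hours = [48, 50, 49, 51, 50, 49, 120, 50]
detector = RollingAnomalyDetector()
flags = [detector.observe(h) for h in transit_hours]
# Only the 120-hour shipment is flagged.
```

Statistical checks like this are cheap enough to run per event; machine learning models can replace the scoring step where richer patterns are needed.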

Analytical platforms must scale to handle large volumes of real-time data while still returning insights quickly and supporting a range of analytical methods.

Decision Support Systems

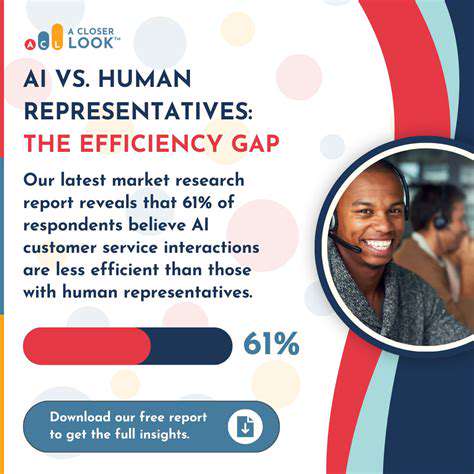

Real-time decision-making often relies on sophisticated decision support systems. These systems integrate data analysis results with business rules and expert knowledge to generate recommendations for action. A well-designed decision support system empowers individuals and teams to make informed decisions quickly and efficiently.
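To make the idea of combining analysis results with business rules concrete, here is a minimal rule-engine sketch. The metric names (`days_of_cover`, `predicted_delay_hours`), the thresholds, and the recommendation texts are hypothetical examples, not rules from any real system.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Rule:
    name: str
    condition: Callable[[Dict[str, float]], bool]
    recommendation: str


# Hypothetical business rules applied to analytics output.
RULES = [
    Rule(
        name="low_stock",
        condition=lambda m: m["days_of_cover"] < 3,
        recommendation="Expedite replenishment order",
    ),
    Rule(
        name="late_shipment_risk",
        condition=lambda m: m["predicted_delay_hours"] > 12,
        recommendation="Notify customer and evaluate alternate carrier",
    ),
]


def recommend(metrics: Dict[str, float], rules: List[Rule] = RULES) -> List[str]:
    """Return the recommendations of every rule whose condition holds."""
    return [rule.recommendation for rule in rules if rule.condition(metrics)]


actions = recommend({"days_of_cover": 2, "predicted_delay_hours": 4})
# actions == ["Expedite replenishment order"]
```

Keeping rules as data makes it easier for domain experts to review and adjust them without touching the analytics code.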

The system should also provide clear visualizations and dashboards that present key performance indicators (KPIs) and other relevant metrics in an easily understandable format.

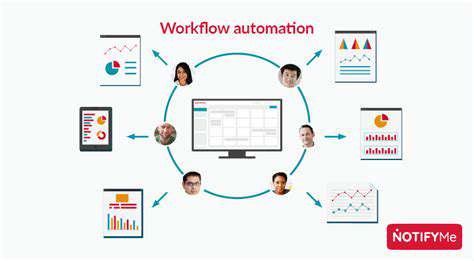

Alerting and Notification Mechanisms

Effective real-time decision-making necessitates timely alerts and notifications. These mechanisms should be triggered by specific events or conditions, providing immediate notification to the relevant stakeholders. Prompt alerts can prevent potential issues or capitalize on opportunities.
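A condition-triggered alert can be sketched as a threshold check with a cooldown, so that stakeholders are notified promptly but not flooded. The metric name, threshold, cooldown, and recipient below are assumptions for illustration; the timestamp is passed in explicitly to keep the example deterministic.

```python
from typing import Dict, List, Optional


class ThresholdAlert:
    """Emit notifications when a metric exceeds a threshold, at most once per cooldown."""

    def __init__(self, metric: str, threshold: float,
                 cooldown_seconds: float, recipients: List[str]):
        self.metric = metric
        self.threshold = threshold
        self.cooldown_seconds = cooldown_seconds
        self.recipients = recipients
        self._last_fired: Optional[float] = None

    def check(self, event: Dict, now: float) -> List[str]:
        """Return notification messages for this event (possibly none)."""
        if event.get("metric") != self.metric:
            return []
        if event["value"] <= self.threshold:
            return []
        in_cooldown = (
            self._last_fired is not None
            and now - self._last_fired < self.cooldown_seconds
        )
        if in_cooldown:
            return []  # suppress repeats while the condition persists
        self._last_fired = now
        return [
            f"To {r}: {self.metric}={event['value']} exceeded {self.threshold}"
            for r in self.recipients
        ]


# Hypothetical cold-storage temperature alert.
alert = ThresholdAlert("reefer_temp_c", threshold=8.0,
                       cooldown_seconds=300, recipients=["logistics-oncall"])
readings = [(0, 7.5), (10, 9.1), (60, 9.4), (400, 9.0)]
sent = [alert.check({"metric": "reefer_temp_c", "value": v}, now=t) for t, v in readings]
# Messages are produced at t=10 and t=400; t=60 falls inside the cooldown.
```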

User Interface and Experience

A user-friendly interface is essential for effective real-time decision-making. Decision-support tools should provide intuitive dashboards and visualizations that enable quick comprehension of key metrics and insights. The user interface should be adaptable and customizable to meet the specific needs of different users.

The system should also allow for customization of alerts and notifications to ensure that users receive only relevant information. Streamlined access to actionable insights is key for improving decision-making speed and quality.
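Per-user customization of alerts can be as simple as filtering on stored preferences. The preference keys (`min_severity`, `regions`), the severity levels, and the sample alerts in this sketch are hypothetical.

```python
from typing import Dict, List

# Assumed severity scale, lowest to highest.
SEVERITY_ORDER = {"info": 0, "warning": 1, "critical": 2}


def alerts_for_user(alerts: List[Dict], preferences: Dict) -> List[Dict]:
    """Keep only alerts that match the user's severity floor and regions."""
    min_level = SEVERITY_ORDER[preferences["min_severity"]]
    return [
        alert for alert in alerts
        if SEVERITY_ORDER[alert["severity"]] >= min_level
        and alert["region"] in preferences["regions"]
    ]


all_alerts = [
    {"id": 1, "severity": "info", "region": "EU"},
    {"id": 2, "severity": "critical", "region": "EU"},
    {"id": 3, "severity": "critical", "region": "APAC"},
]
planner_prefs = {"min_severity": "warning", "regions": {"EU"}}
visible = alerts_for_user(all_alerts, planner_prefs)
# visible contains only alert 2
```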

Integration and Scalability

Real-time decision-making systems must be seamlessly integrated with existing business systems and processes. This ensures that data flows smoothly and that decisions are based on a complete picture of the business environment. Integration should minimize disruptions to existing operations.

The system should also be designed to scale, handling growing volumes of data and user requests as the business grows and its needs change.

Data Lakehouse Benefits for Supply Chain Agility

Improved Data Visibility and Accessibility

A data lakehouse architecture gives supply chain teams broad visibility into their data. By centralizing data from various sources, including operational systems, logistics platforms, and customer interactions, the lakehouse provides a holistic view of the entire supply chain. This unified view supports real-time insight into inventory levels, order fulfillment progress, transportation status, and potential bottlenecks, enabling proactive decision-making and faster responses to changing market conditions.
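The unified view described above amounts to joining data from several sources on shared keys. The sketch below does this in plain Python with made-up order, inventory, and shipment data; in a lakehouse the same join would normally be expressed as a query over tables.

```python
from typing import Dict, List

# Hypothetical extracts from three separate source systems.
orders = [
    {"order_id": "O-1", "sku": "A100", "qty": 30},
    {"order_id": "O-2", "sku": "B200", "qty": 5},
]
inventory = {"A100": 20, "B200": 50}   # sku -> units on hand
shipments = {"O-1": "in_transit"}      # order_id -> shipment status


def unified_view(orders: List[Dict], inventory: Dict[str, int],
                 shipments: Dict[str, str]) -> List[Dict]:
    """Combine order, stock, and shipment data into one row per order."""
    view = []
    for order in orders:
        on_hand = inventory.get(order["sku"], 0)
        view.append({
            **order,
            "on_hand": on_hand,
            "shortfall": max(order["qty"] - on_hand, 0),
            "shipment_status": shipments.get(order["order_id"], "not_shipped"),
        })
    return view


view = unified_view(orders, inventory, shipments)
# view[0] shows a shortfall of 10 units for order O-1.
```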

The accessibility of this consolidated data is a significant advantage. Supply chain professionals can easily access and analyze the data they need, regardless of its format or origin. This democratization of data empowers individuals across the supply chain to make informed decisions, leading to faster problem-solving and more efficient workflows.

Enhanced Data Quality and Consistency

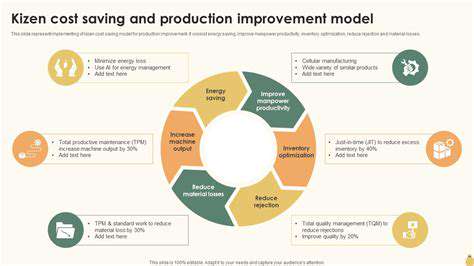

Data quality is paramount in a dynamic supply chain. A data lakehouse architecture facilitates the standardization and validation of data from disparate sources, leading to greater consistency and reliability. Data cleansing and transformation processes become more streamlined, minimizing errors and ensuring that the insights derived from the data are accurate and trustworthy.
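Standardization and validation can be illustrated with a small function that converts units to a common form and reports problems instead of silently passing bad data through. The field names (`shipment_id`, `weight`, `weight_unit`, `ship_date`) and the choice of kilograms and ISO 8601 dates as the standard are assumptions for this sketch.

```python
from datetime import date
from typing import Dict, List, Optional, Tuple

LB_TO_KG = 0.45359237  # international pound, exact by definition


def standardize(record: Dict) -> Tuple[Dict, List[str]]:
    """Return a standardized record plus a list of validation errors."""
    errors: List[str] = []

    weight = record.get("weight")
    unit = str(record.get("weight_unit", "")).lower()
    weight_kg: Optional[float] = None
    if not isinstance(weight, (int, float)) or weight <= 0:
        errors.append("weight must be a positive number")
    elif unit == "kg":
        weight_kg = float(weight)
    elif unit == "lb":
        weight_kg = weight * LB_TO_KG
    else:
        errors.append(f"unknown weight_unit: {unit!r}")

    ship_date: Optional[date] = None
    try:
        ship_date = date.fromisoformat(record.get("ship_date", ""))
    except (TypeError, ValueError):
        errors.append("ship_date must be an ISO 8601 date (YYYY-MM-DD)")

    clean = {
        "shipment_id": record.get("shipment_id"),
        "weight_kg": round(weight_kg, 3) if weight_kg is not None else None,
        "ship_date": ship_date,
    }
    return clean, errors


good, good_errors = standardize(
    {"shipment_id": "S1", "weight": 10, "weight_unit": "lb", "ship_date": "2024-03-01"}
)
bad, bad_errors = standardize(
    {"shipment_id": "S2", "weight": -1, "weight_unit": "kg", "ship_date": "03/01/2024"}
)
```

Returning errors alongside the record lets a governance process decide whether to reject, quarantine, or repair the data.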

By establishing data governance policies within the lakehouse environment, organizations can maintain data accuracy and integrity, so that all stakeholders work from the same reliable information. The result is a more unified and efficient supply chain operation with a lower risk of costly errors and delays.

Real-time Analytics for Proactive Decision Making

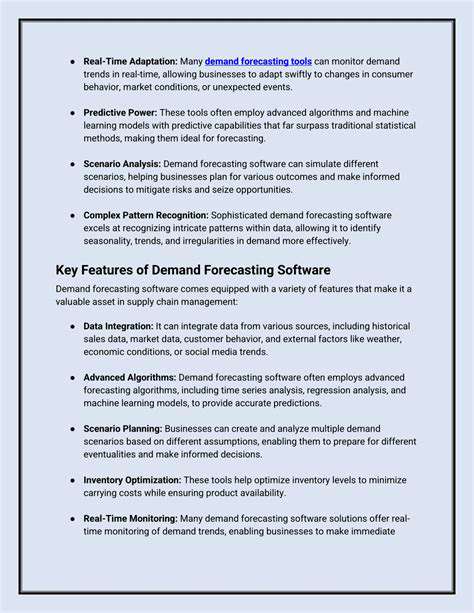

A data lakehouse enables real-time analytics by feeding data streams directly into analytical processes. This capability is a significant advantage in a supply chain, where speed and responsiveness are crucial. By analyzing data in real time, supply chain managers can quickly identify emerging trends, predict potential disruptions, and react proactively to changing market conditions or unexpected events.

Real-time analytics can be leveraged to optimize inventory management, predict demand fluctuations, and proactively address potential supply chain bottlenecks. The ability to react swiftly to these challenges ensures that the supply chain remains agile and resilient in the face of unforeseen circumstances.
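As one hedged example of predicting demand and acting on it, the sketch below uses simple exponential smoothing, a basic forecasting technique chosen here purely for illustration; the article does not prescribe a method. The smoothing factor, demand history, lead time, and reorder check are all assumptions.

```python
from typing import Sequence


def exponential_smoothing(demand: Sequence[float], alpha: float = 0.5) -> float:
    """Return a one-step-ahead forecast using simple exponential smoothing."""
    if not demand:
        raise ValueError("demand history must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    forecast = demand[0]
    for actual in demand[1:]:
        # Blend the latest observation with the previous forecast.
        forecast = alpha * actual + (1 - alpha) * forecast
    return forecast


def needs_reorder(on_hand: float, daily_forecast: float, lead_time_days: float) -> bool:
    """True if stock on hand would not cover forecast demand over the lead time."""
    return on_hand < daily_forecast * lead_time_days


# Hypothetical daily unit demand for one SKU.
daily_demand = [100, 120, 80, 100]
forecast = exponential_smoothing(daily_demand, alpha=0.5)  # 97.5
reorder = needs_reorder(on_hand=250, daily_forecast=forecast, lead_time_days=3)  # True
```

A production system would typically add safety stock and account for seasonality, which this sketch leaves out.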

Streamlined Collaboration and Data Sharing

A data lakehouse facilitates seamless collaboration and data sharing among various stakeholders within the supply chain. The centralized data repository allows different teams, including procurement, logistics, operations, and sales, to access and analyze the same data, fostering a unified understanding of the overall supply chain performance.

This improved collaboration leads to better communication, faster decision-making, and more coordinated responses to challenges. The sharing of critical data fosters a culture of transparency and knowledge sharing across departments, which is essential for maintaining supply chain agility and resilience in today's complex and rapidly evolving market.
